This is a quick opinion post on Microsoft’s unveiling of PowerShell script support for installing and uninstalling Intune Win32 applications.

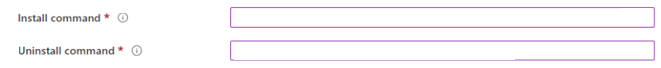

Traditionally, we specified an install and uninstall command line when configuring Intune Win32 applications. We might specify a command line similar to:

`powershell.exe -executionpolicy bypass -file .\deploy-application.ps1`

Easy enough, right?

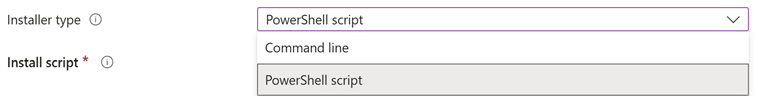

But nowadays, tenants that have received PowerShell script support will see another option when deploying Win32 apps in Intune: “PowerShell script”.

This new option allows administrators to paste a chunk of PowerShell code into the Intune console to install and uninstall applications, as opposed to just specifying a command line that points to a PS1 script inside the compiled and encrypted .intunewin payload.

But I don’t see the point of it. And I don’t necessarily like it.

Microsoft mentioned support for PowerShell scripts in Intune Win32 back in September 2025, and there is now a more recent article that explains when to use the PowerShell script option as opposed to the command line option.

To quote the article:

- Your app requires prerequisite validation before installation

- You need to perform configuration changes alongside app installation

- The installation process requires conditional logic

- Post-installation actions are needed (like registry modifications or service configuration)

Uh? So in other words, absolutely no benefits over calling a PS1 script from within the .intunewin. What’s more, these scripts (stored in the application metadata) are limited to 50 KB in size?
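To illustrate the point, here is a minimal sketch of how a PS1 inside the .intunewin could already cover all four of those scenarios. Everything specific in it is a hypothetical placeholder of mine rather than anything from a real package: the `setup.msi` file name, the `INSTALL64` MSI property, the Contoso registry path and the 5 GB threshold.

```powershell
# deploy-application.ps1: hypothetical sketch of a script shipped inside the .intunewin.
# Assumed placeholders: setup.msi, the INSTALL64 property, the Contoso registry path, the 5 GB limit.

# Prerequisite validation: require at least 5 GB free on the system drive.
$drive = Get-PSDrive -Name ($env:SystemDrive.TrimEnd(':'))
if ($drive.Free -lt 5GB) {
    Write-Host 'Prerequisite failed: less than 5 GB free.'
    exit 1
}

# Conditional logic: add a (hypothetical) MSI property on 64-bit Windows only.
$msiArgs = @('/i', "`"$PSScriptRoot\setup.msi`"", '/qn', '/norestart')
if ([Environment]::Is64BitOperatingSystem) {
    $msiArgs += 'INSTALL64=1'
}

# Install, and stop if msiexec reports anything other than success (0) or reboot required (3010).
$install = Start-Process -FilePath 'msiexec.exe' -ArgumentList $msiArgs -Wait -PassThru
if ($install.ExitCode -notin 0, 3010) {
    exit $install.ExitCode
}

# Post-installation action / configuration change: write a registry value.
$regPath = 'HKLM:\SOFTWARE\Contoso\App'
if (-not (Test-Path -Path $regPath)) {
    New-Item -Path $regPath -Force | Out-Null
}
New-ItemProperty -Path $regPath -Name 'DisableAutoUpdate' -Value 1 -PropertyType DWord -Force | Out-Null

# Hand the installer's exit code back to Intune.
exit $install.ExitCode
```

A script like this would be called with exactly the same command line as before, and it lives in source control alongside the rest of the package.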

I’ve read other blog posts on this new feature explaining why it is “advantageous” over the command line option, giving reasons such as:

- “Script changes no longer require a full rebuild and reupload of the app.”

- “Reduced overhead of testing, repackaging and updating.”

- “Difficult to see code when it’s compiled inside an Intunewin.”

- “Can now see the code directly in the Intune portal.”

And I still don’t get it.

To expand on the first two bullets (which made me slightly sick in my mouth when I read them), what we’re essentially advocating here is making changes on the fly in the Intune console without the application being version-controlled, correctly tested and documented. So in other words, this new feature endorses wild-west application management, which is something I hate.

And on the last two bullets – surely we all store the original package/source code in an uncompressed, pre-compiled, version-controlled, secure environment with sufficient data retention policies in place?

At the moment, all I see with this new feature is a license for cowboy administrators to make undocumented changes on the fly. In reality, if the install or uninstall script changes, we should be releasing a new version of the package, regardless of how small the change is. That shouldn’t be too arduous, considering a large part of our application packaging process is automated (isn’t it?).